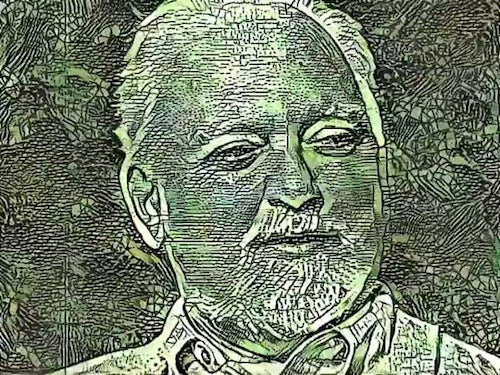

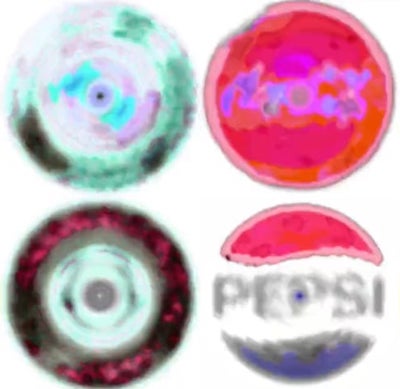

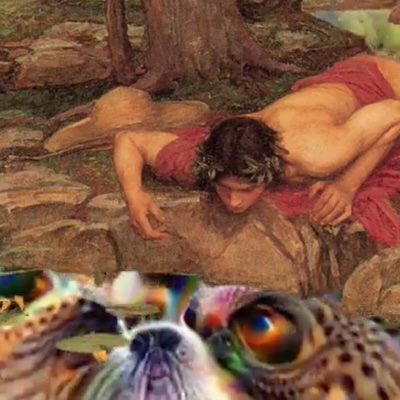

In the early days of what we now call AI, Google released an experimental programme called Deep Dream, which could be run on most personal computers. While ostensibly a visualisation of how the algorithm was processing and categorising images, it had the byproduct of producing wildly psychedelic imagery. This made it very popular with digital artists, who dubbed the look the "puppy slug" aesthetic. I was not immune.

As with a lot of these early machine learning tools, the biggest struggle was figuring out what to do with them beyond generating mindless spectacle. This was one of my attempts.

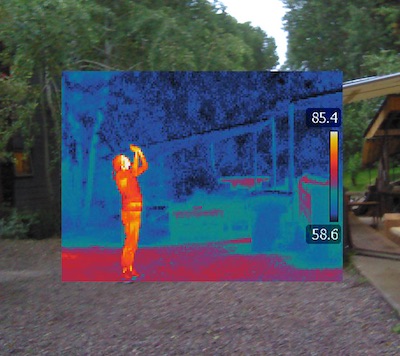

Even at this early stage, it was clear that these systems could only work with what they were given. Deep Dream interprets the world as mostly made up of animals and architecture because the training data available to it was mostly pictures of animals and architecture. When asked to process anything else, it fails.

A crude reading of the myth of Narcissus has the young chap fall under the spell of his own image, unable to distinguish it from himself. I felt this might be relevant.

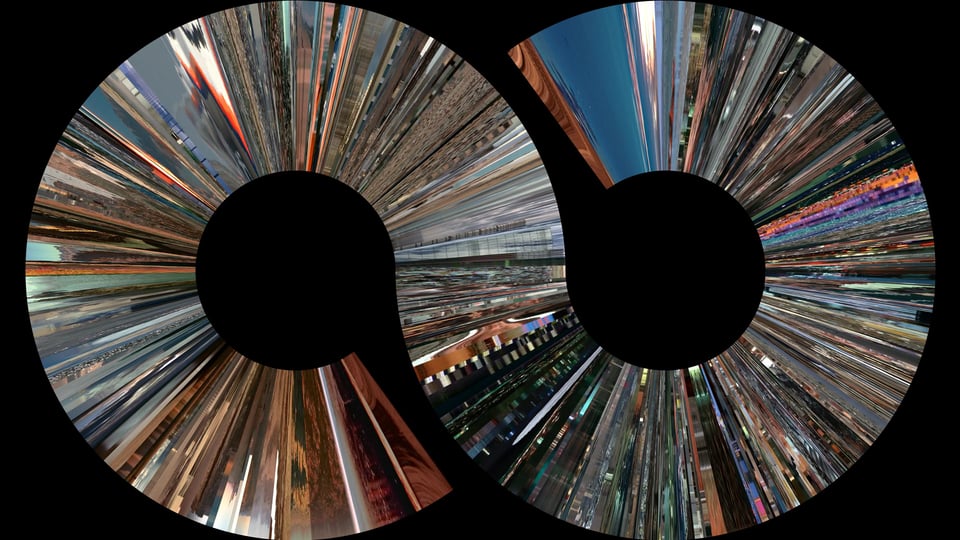

I took John William Waterhouse's *Echo and Narcissus* and passed it through the Deep Dream programme. I then restored Narcissus himself from the original painting.

Next, I took a video that was widely shared at the time, which scrolls through the layers of the Deep Dream programme, and superimposed the painting over it.

Finally, I took Caravaggio's darker *Narcissus* and passed only the lower half of the painting through Deep Dream.

A slight work, but I think it still holds up as a critique.