A Cargo Cult for Artificial Intelligence

Three months of new work exploring human interactions with complex systems. The production was the centrepiece of, and lent its name to, the wider Instructions for Humans exhibition, which explored Internet art in an age of mass surveillance at BOM (Birmingham Open Media) from 13 September to 16 December 2017.

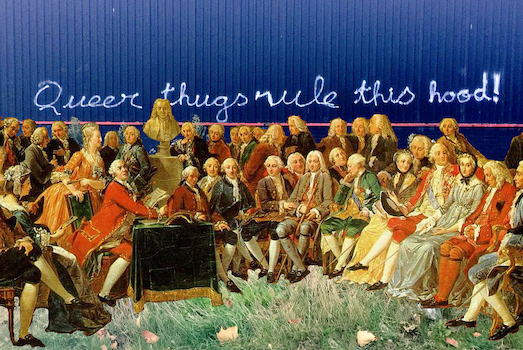

The phenomenon of cargo cults was observed on South Pacific islands after WWII, as islanders used ritual to try to make sense of the advanced military technology that had suddenly entered their lives. Instructions for Humans asks: what might a cargo cult for artificial intelligence look like?

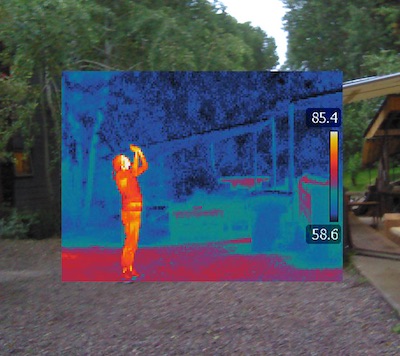

The work uses recent artificial intelligence and machine learning tools to explore the systems that guide society and our relationship to them.

Work produced in the gallery was documented on the (now archived) website instructionsforhumans.com. Key works generated during the project are archived here:

- The Black Box - an installation serving as a metaphor for opaque systems.

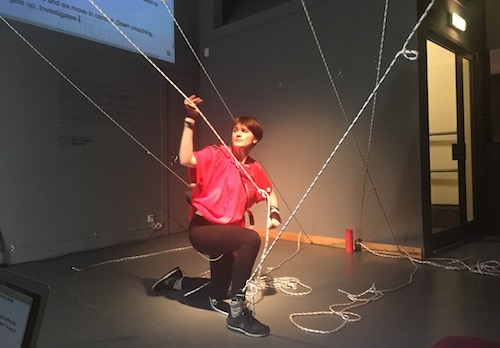

- Data Cult - collaborations with performance artists.

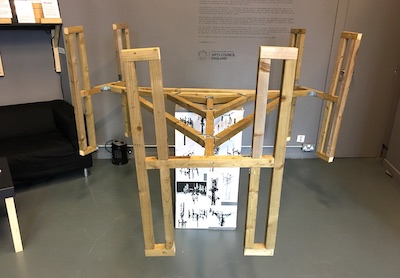

- Cell Tower Mast for Sympathetic Magic - a sculpture for the hurricane victims of Puerto Rico.

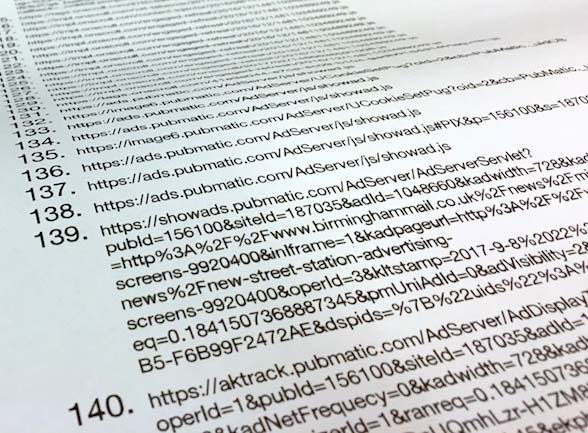

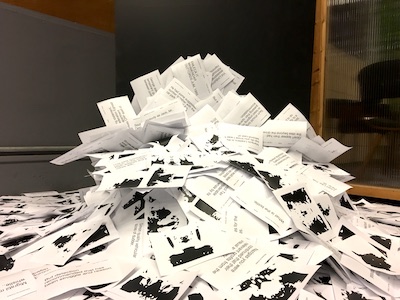

- 176 Birmingham Mail Website Trackers - an indictment of local media business models.

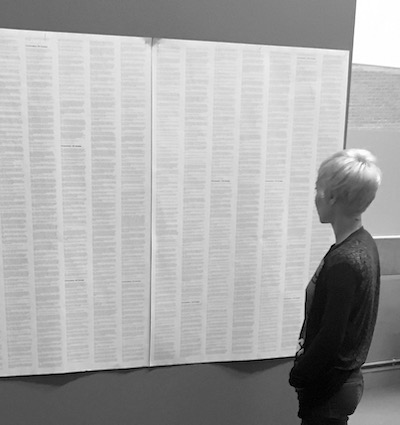

- Transcripts of Humans - conversations with the artist in the gallery, summarised and posted to the gallery wall.